Personal AI Infrastructure

Open-source scaffolding for building your own AI-powered operating system

The best AI in the world should be available to everyone

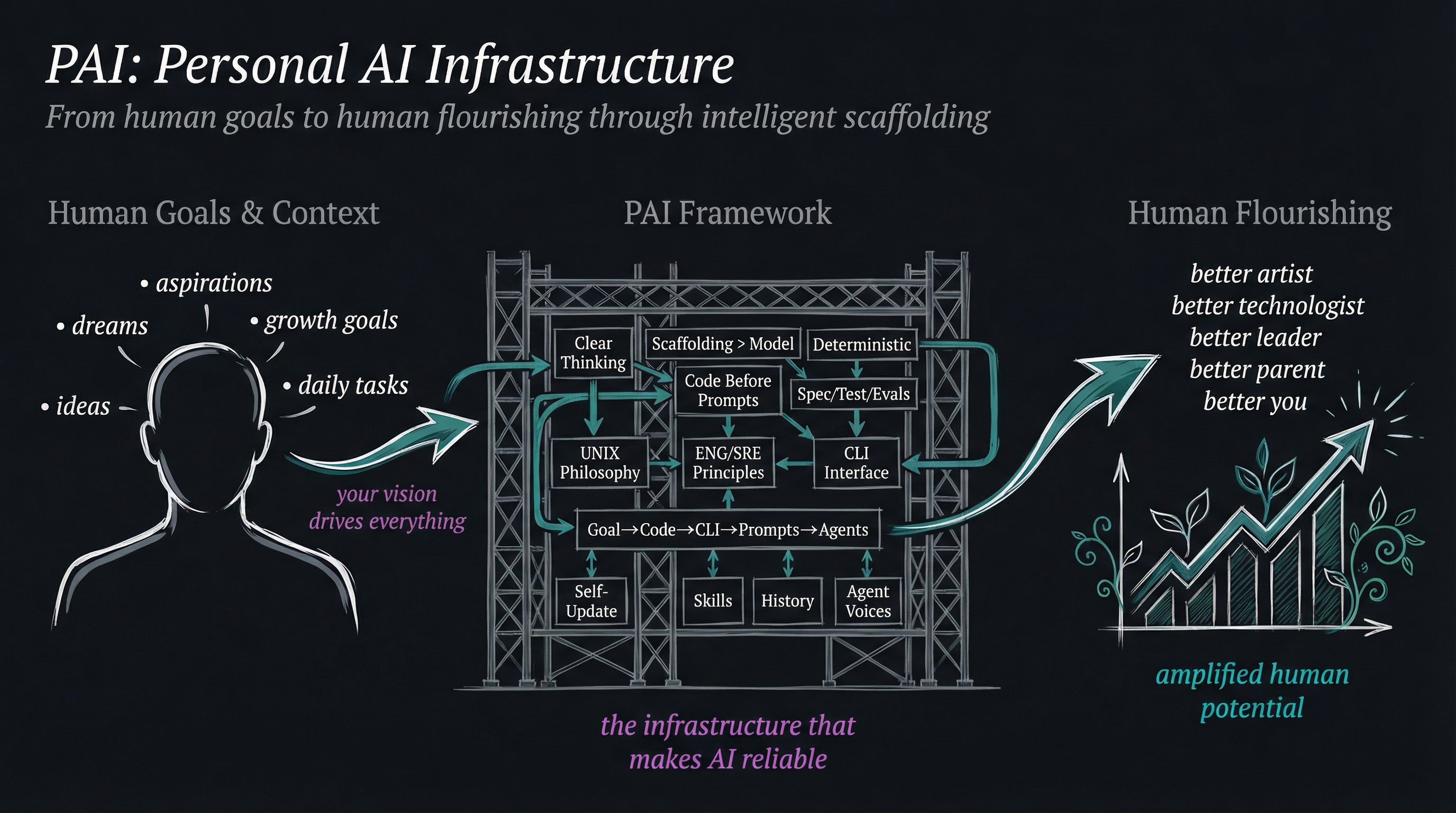

PAI 1.0.0 represents a fundamental shift: from a monolithic "mirror my exact system" approach to a modular, package-based architecture that democratizes AI infrastructure.

The Jenga Tower Effect

Early PAI was a monolithic system where everything depended on everything else. Want to use just one skill? Too bad—you had to clone the entire infrastructure.

Updates broke workflows. Dependencies tangled. The system became fragile, like a Jenga tower where pulling one block could collapse everything.

Modular Packages

PAI 1.0.0 introduces self-contained packages. Each package is a complete unit with its own dependencies, documentation, and installation scripts.

Install only what you need. Update packages independently. Build your own packages. Share them with the community. The infrastructure serves you—not the other way around.
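The following minimal Bash sketch illustrates what "self-contained" and "install only what you need" can mean in practice. It assumes a hypothetical package layout in which each package directory carries its own `README.md` (documentation) and `install.sh` (installation script). PAI's real package format may differ, and `check_package` and `install_package` are illustrative names only.

```bash
#!/usr/bin/env bash
# Minimal sketch. ASSUMPTION: each package is a directory containing its own
# README.md (documentation) and install.sh (installation script). This layout
# and the function names are hypothetical, not PAI's documented format.

# Return 0 if the package directory contains every required file.
check_package() {
  local pkg_dir="$1" required missing=0
  for required in README.md install.sh; do
    if [ ! -f "$pkg_dir/$required" ]; then
      echo "missing: $pkg_dir/$required" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Install one package by running only that package's own install script.
install_package() {
  local pkg_dir="$1"
  check_package "$pkg_dir" || return 1
  # The subshell keeps the cd from leaking into the caller's shell.
  (cd "$pkg_dir" && bash ./install.sh)
}

# Demo: create a throwaway package and install just that one.
demo_dir=$(mktemp -d)
mkdir -p "$demo_dir/example-skill"
printf '# example-skill\n' > "$demo_dir/example-skill/README.md"
printf 'echo "installed example-skill"\n' > "$demo_dir/example-skill/install.sh"
install_package "$demo_dir/example-skill"   # prints: installed example-skill
```

Because each install script runs in its own subshell rooted at its package directory, installing or updating one package does not touch the others.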

Anyone can create and share packages without understanding the entire PAI architecture.

Packages work across any AI platform. Your skills, your agents, your infrastructure.

Each package is self-contained. Update one without breaking others.

248 Fabric patterns run natively in your context. No CLI spawning required.
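Running a pattern "natively" can be pictured as reading the pattern's prompt text and placing it in the model's context directly, instead of starting a separate `fabric` process. The minimal Bash sketch below assumes Fabric's convention of one directory per pattern containing a `system.md` file. `PATTERNS_DIR`, its default location, and `load_pattern` are hypothetical, since where PAI keeps its patterns is not stated here.

```bash
#!/usr/bin/env bash
# Minimal sketch. ASSUMPTIONS: patterns are stored as
# $PATTERNS_DIR/<name>/system.md (Fabric's per-pattern layout);
# the PATTERNS_DIR default and load_pattern are hypothetical.

PATTERNS_DIR="${PATTERNS_DIR:-$HOME/patterns}"

# Print the pattern prompt, a newline separator, then the user input from stdin.
# The combined text can go straight into the model's context: no CLI spawned.
load_pattern() {
  local name="$1"
  local file="$PATTERNS_DIR/$name/system.md"
  if [ ! -f "$file" ]; then
    echo "unknown pattern: $name" >&2
    return 1
  fi
  cat "$file"
  echo
  cat
}
```

For example, `cat article.txt | load_pattern summarize` would produce one block of text holding both the pattern's instructions and the article.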

PAI is built on four core primitives that work together to create a powerful, flexible AI infrastructure tailored to your needs.

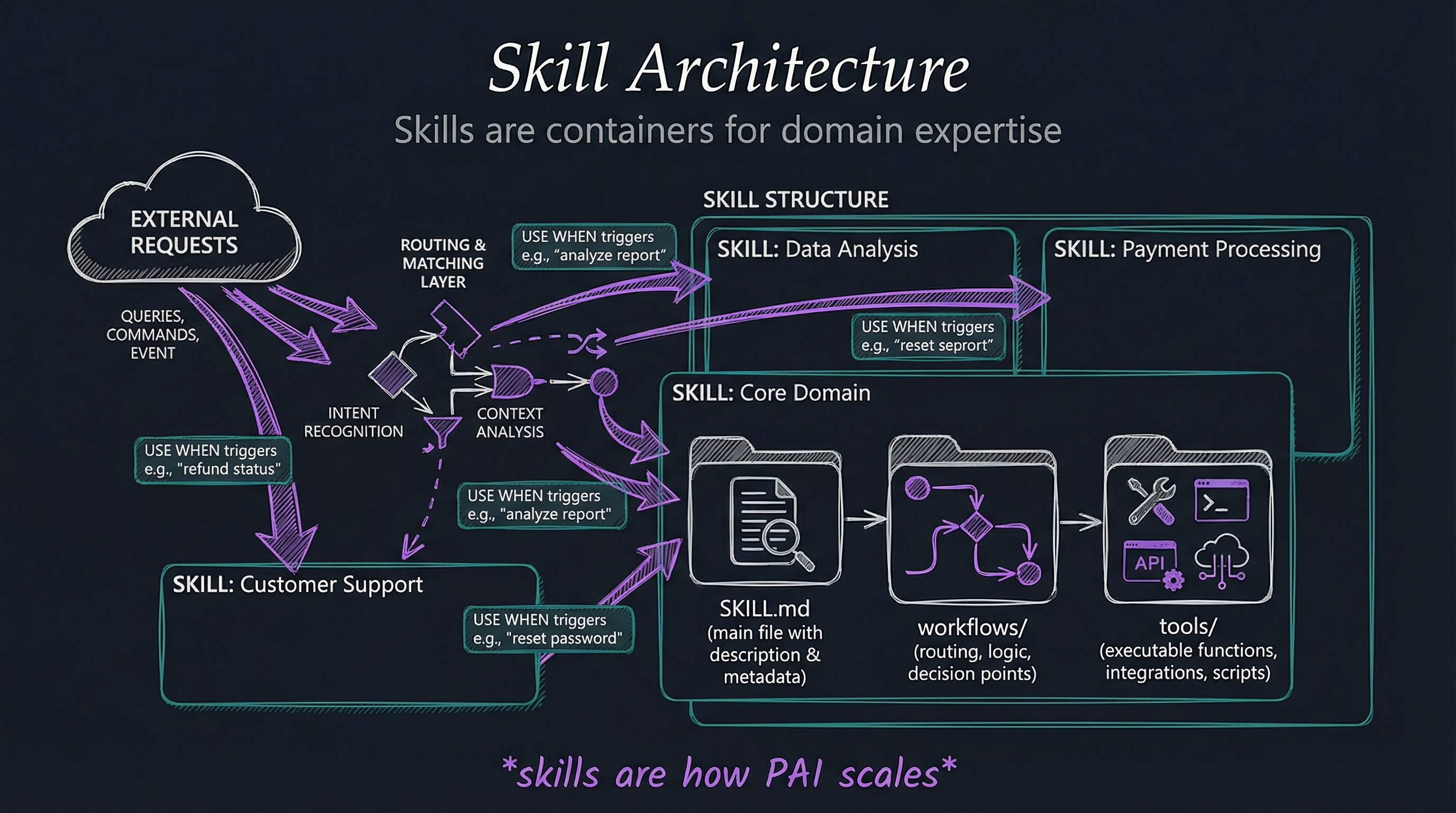

Modular capabilities you can install and extend

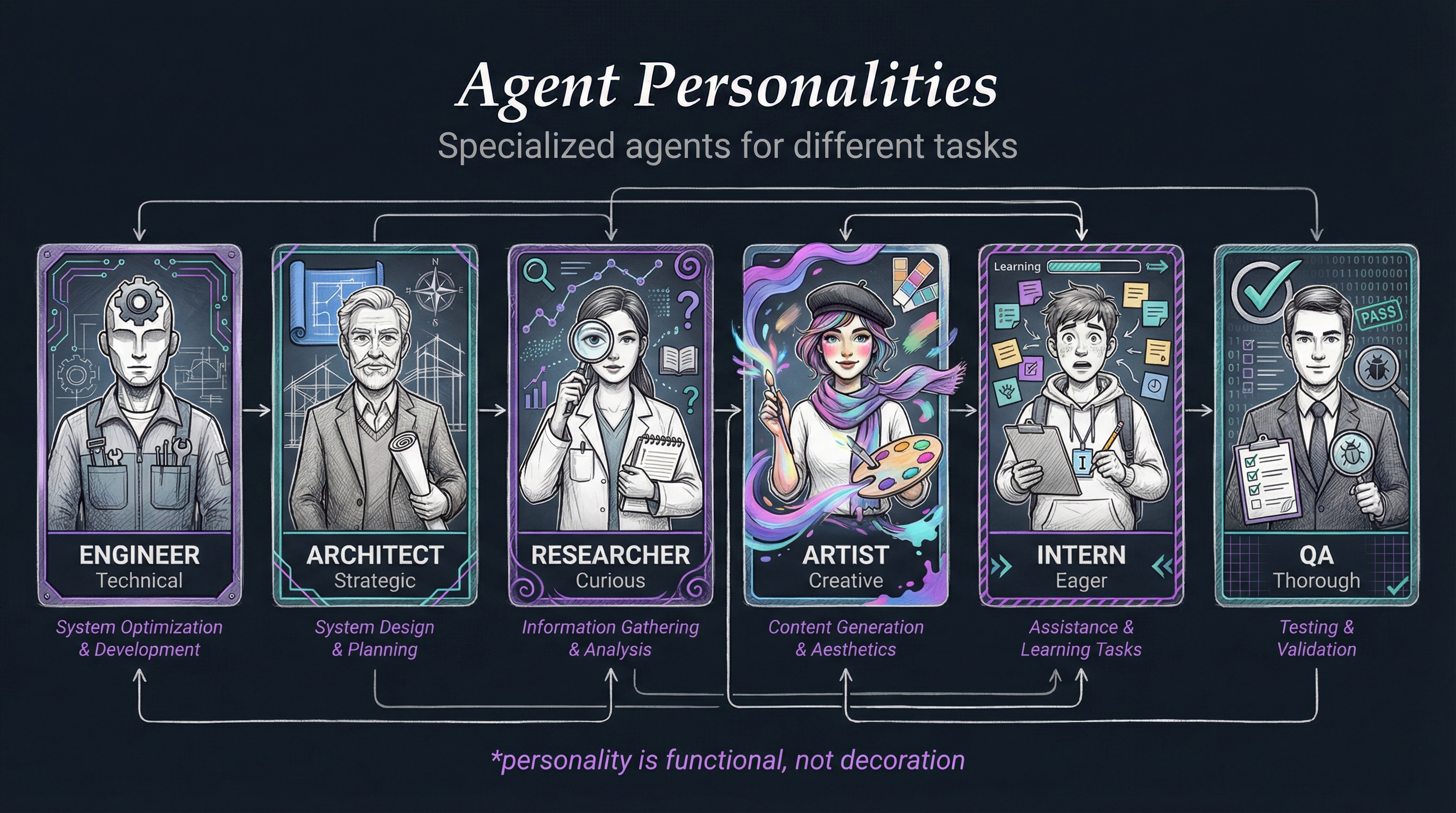

Specialized AI personas with unique expertise

Event-driven automation and workflows

Unified Observation and Context System (UOCS)

These principles define how to build reliable, scalable, and maintainable AI infrastructure.

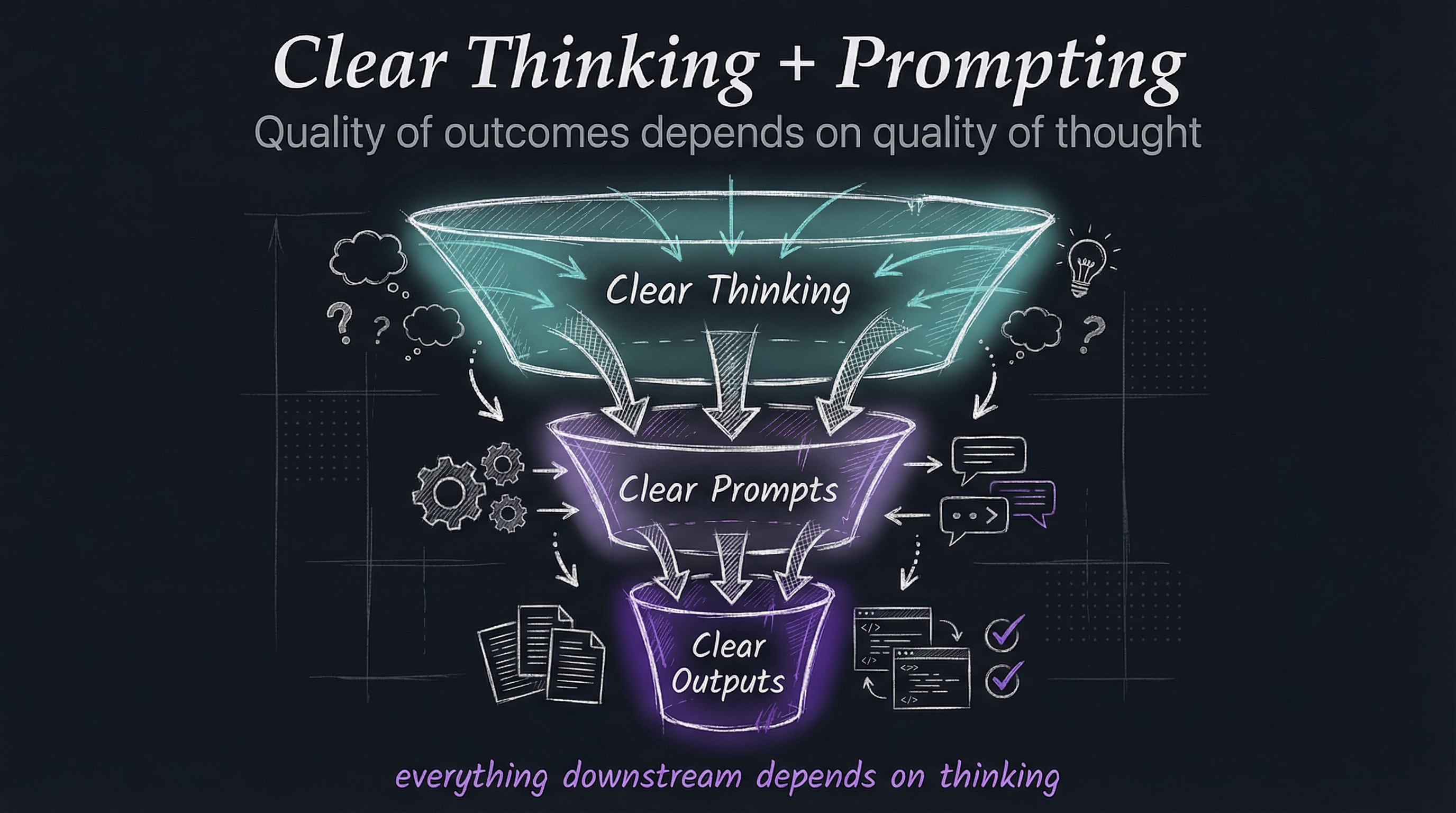

The quality of outcomes depends on the quality of thinking and prompts. Clear thinking comes before code.

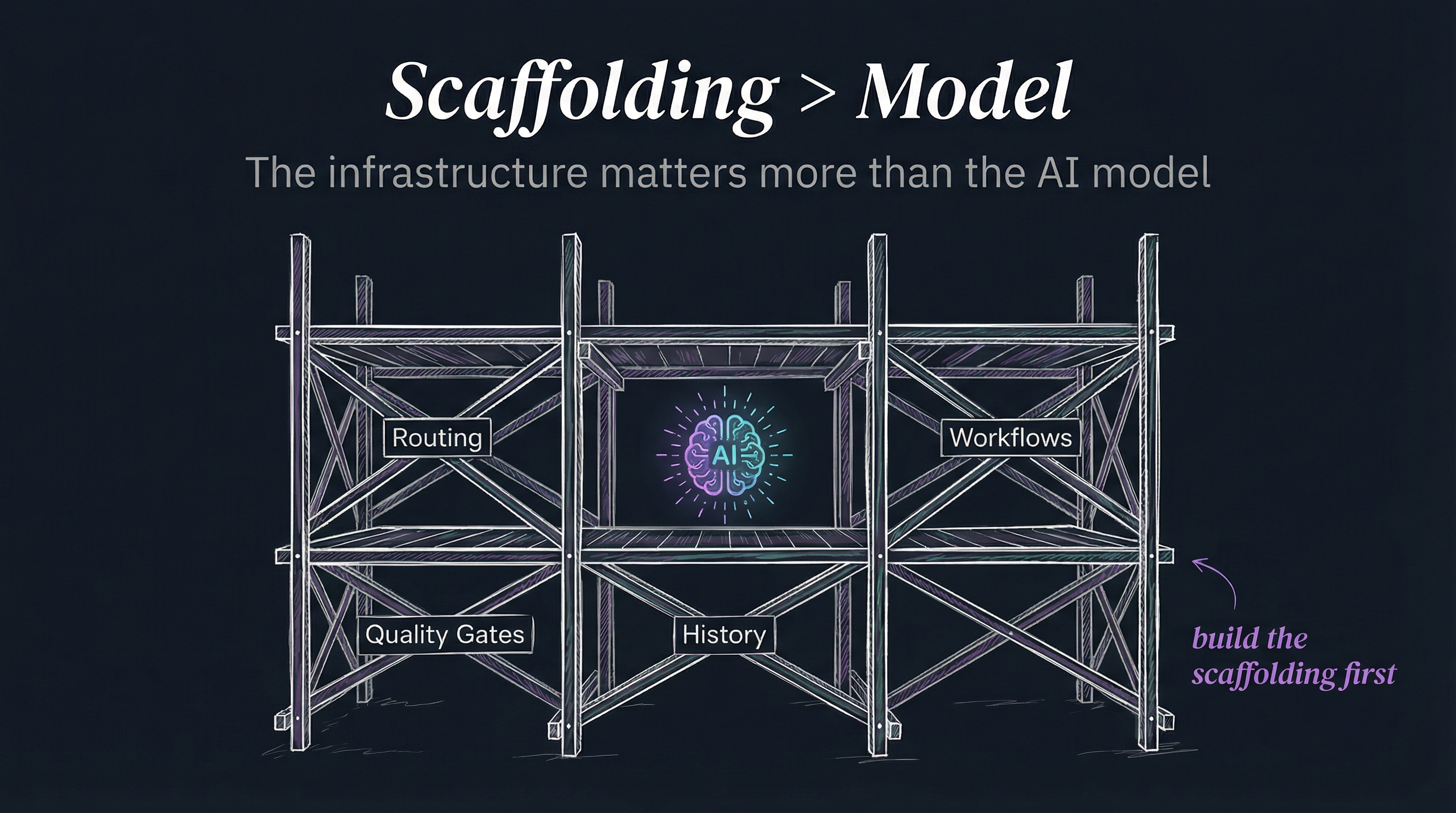

System architecture matters more than the underlying AI model. Structure outperforms raw power.

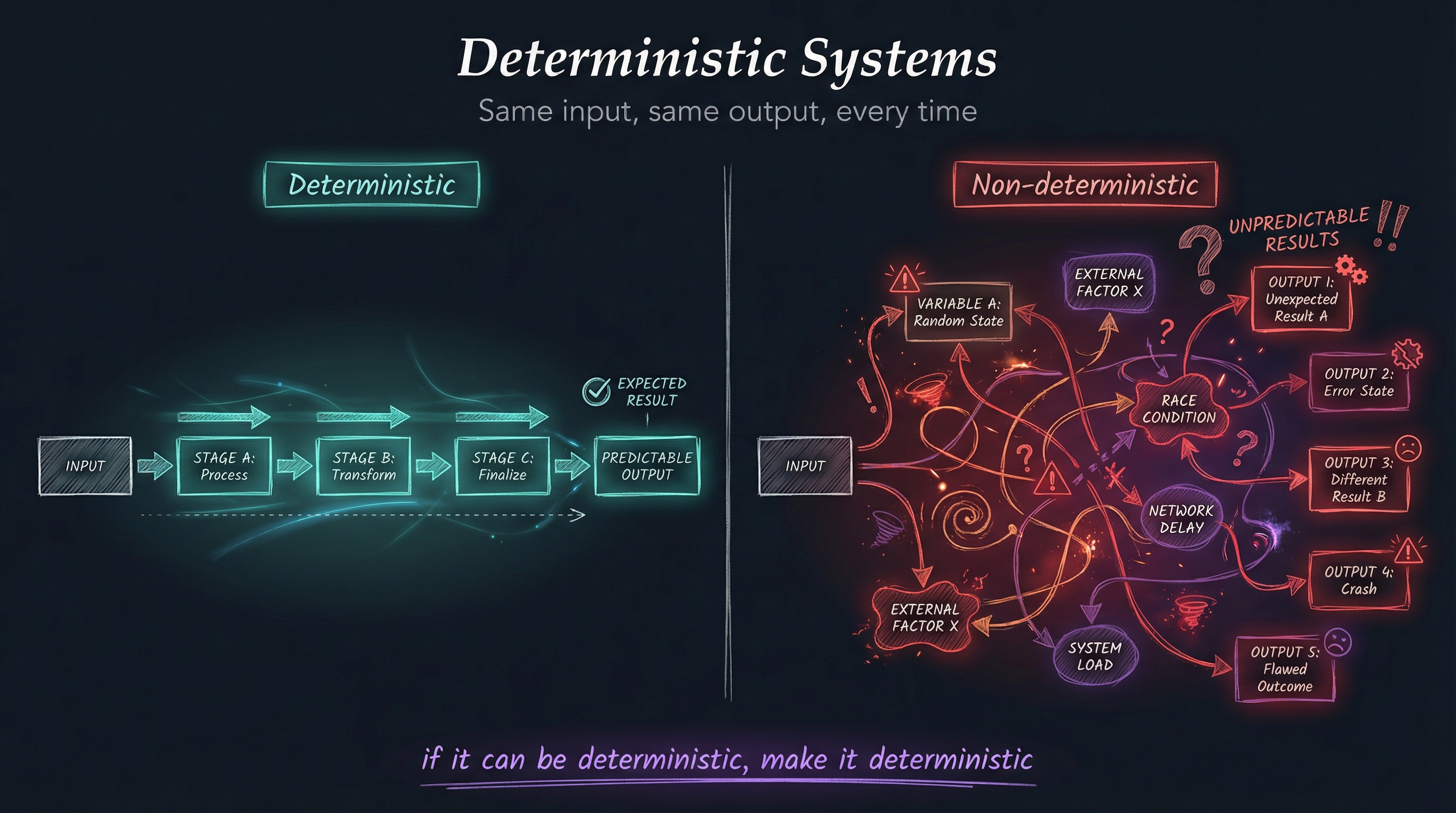

Favor predictable, repeatable outcomes over flexibility. Same input → Same output. Always.

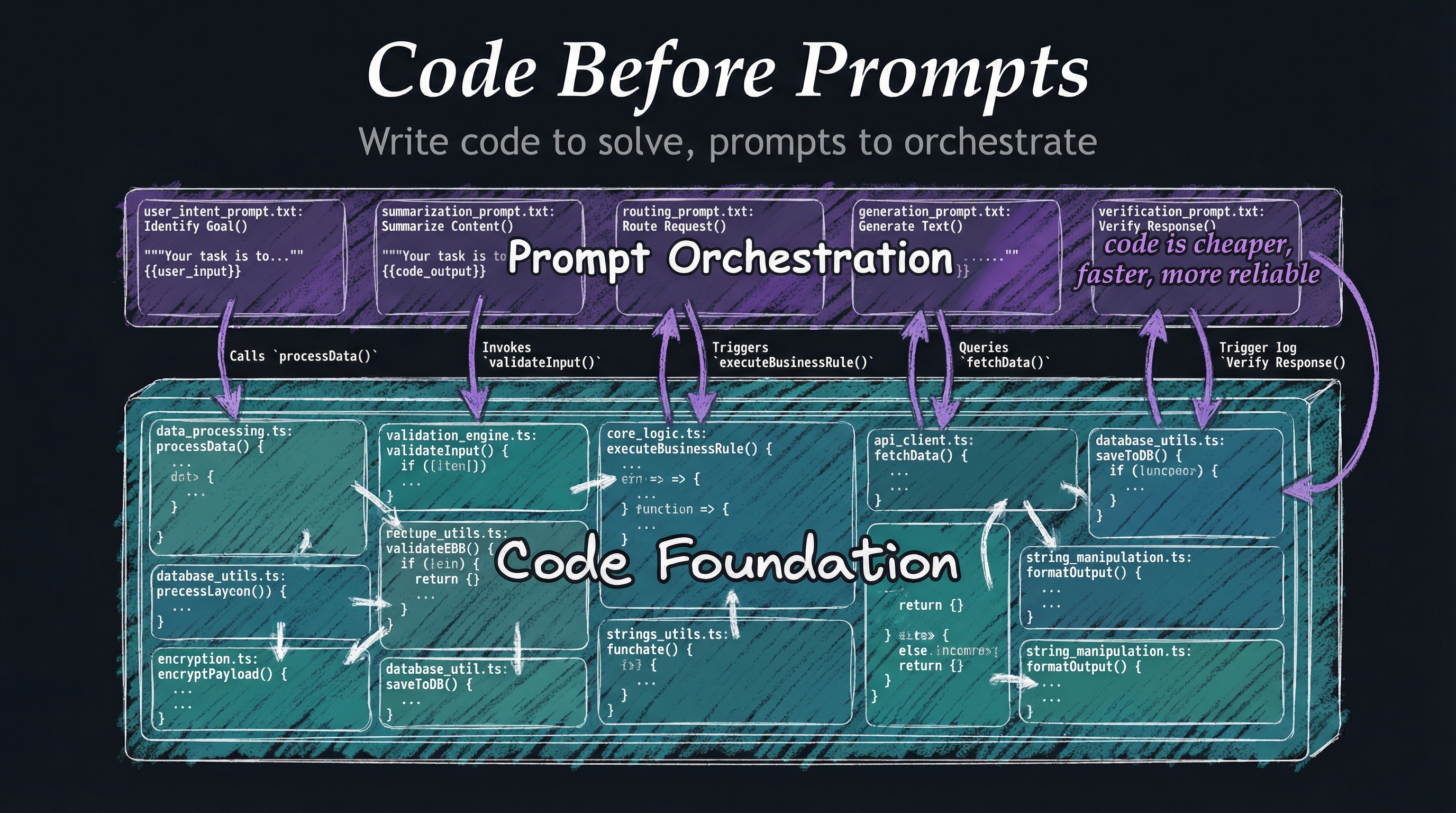

Solve problems with code first. Only use prompts when code cannot handle the task.
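As a small illustration of "code first": pulling links out of text is a job plain `grep` handles deterministically, so it needs no prompt at all. The `extract_urls` helper and its deliberately simple URL regex are illustrative assumptions, not part of PAI.

```bash
#!/usr/bin/env bash
# Minimal sketch of "code before prompts". extract_urls is hypothetical and its
# regex is intentionally simple (a match stops at whitespace, quotes, or < >).

extract_urls() {
  # -E: extended regex; -o: print only the matching part, one per line.
  # "|| true" keeps a no-match result from being reported as a failure.
  grep -Eo 'https?://[^[:space:]"<>]+' || true
}

printf 'Docs: https://example.com/docs and http://example.org\n' | extract_urls
```

The same input always yields the same links, which also serves the determinism principle.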

Define specifications, write tests, and create evaluations before implementation.

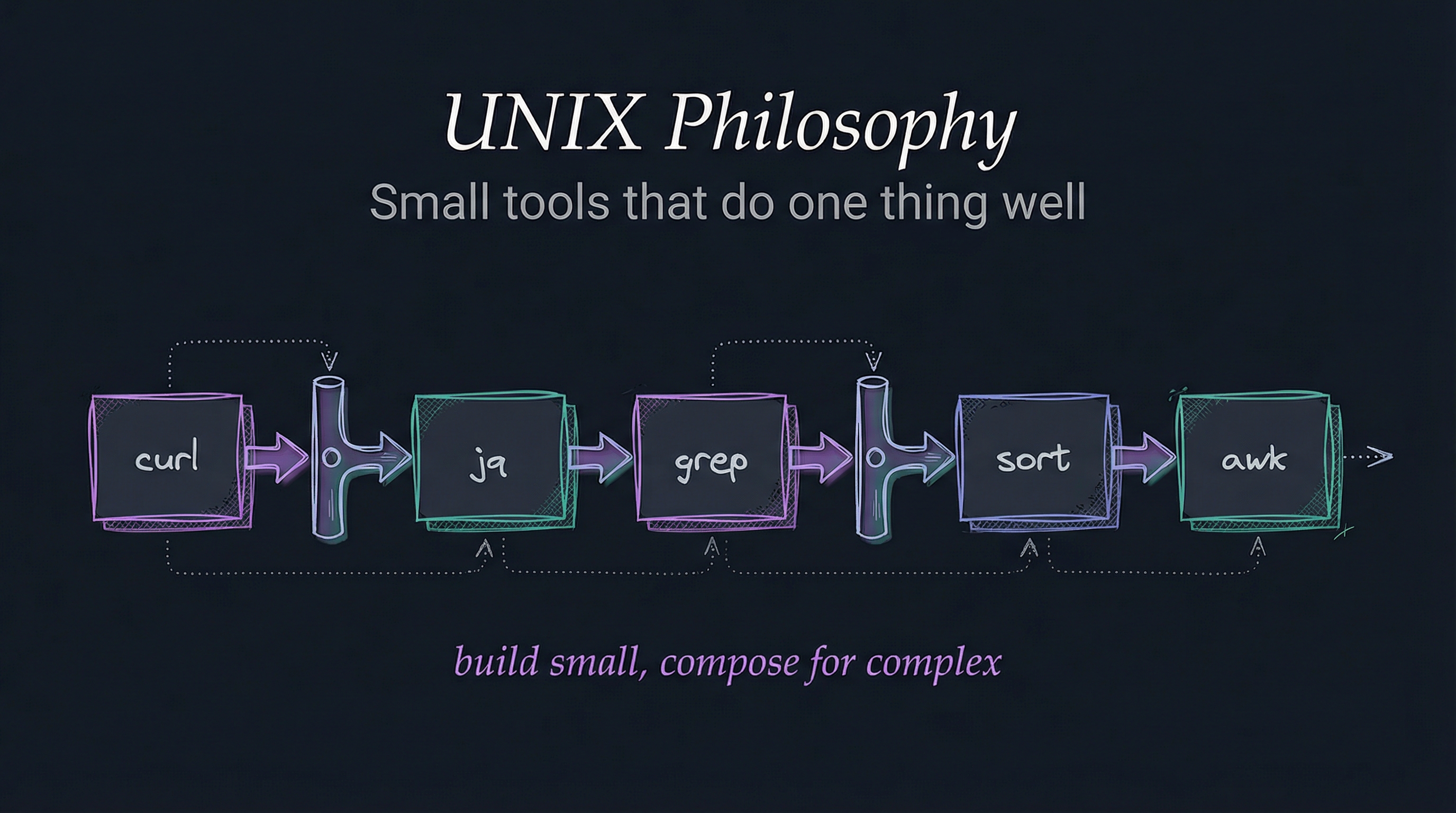

Do one thing well. Compose small, focused tools into powerful systems.
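A minimal Bash sketch of the idea: three tiny functions, each doing one job, composed into a word-frequency tool with pipes. The function names are illustrative, not PAI commands.

```bash
#!/usr/bin/env bash
# Minimal sketch of composing small, single-purpose tools with pipes.
# The function names are illustrative, not PAI commands.

lowercase() { tr '[:upper:]' '[:lower:]'; }                      # normalize case
split_words() { tr -cs '[:alnum:]' '\n'; }                        # one word per line
top_words() { sort | uniq -c | sort -rn | head -n "${1:-5}"; }    # rank by count

# No stage knows about the others; the pipeline is what makes the system.
printf 'Do one thing well. Do it WELL.\n' | lowercase | split_words | top_words 3
```

Any stage can be swapped out (for example, a smarter tokenizer in place of `split_words`) without touching the rest.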

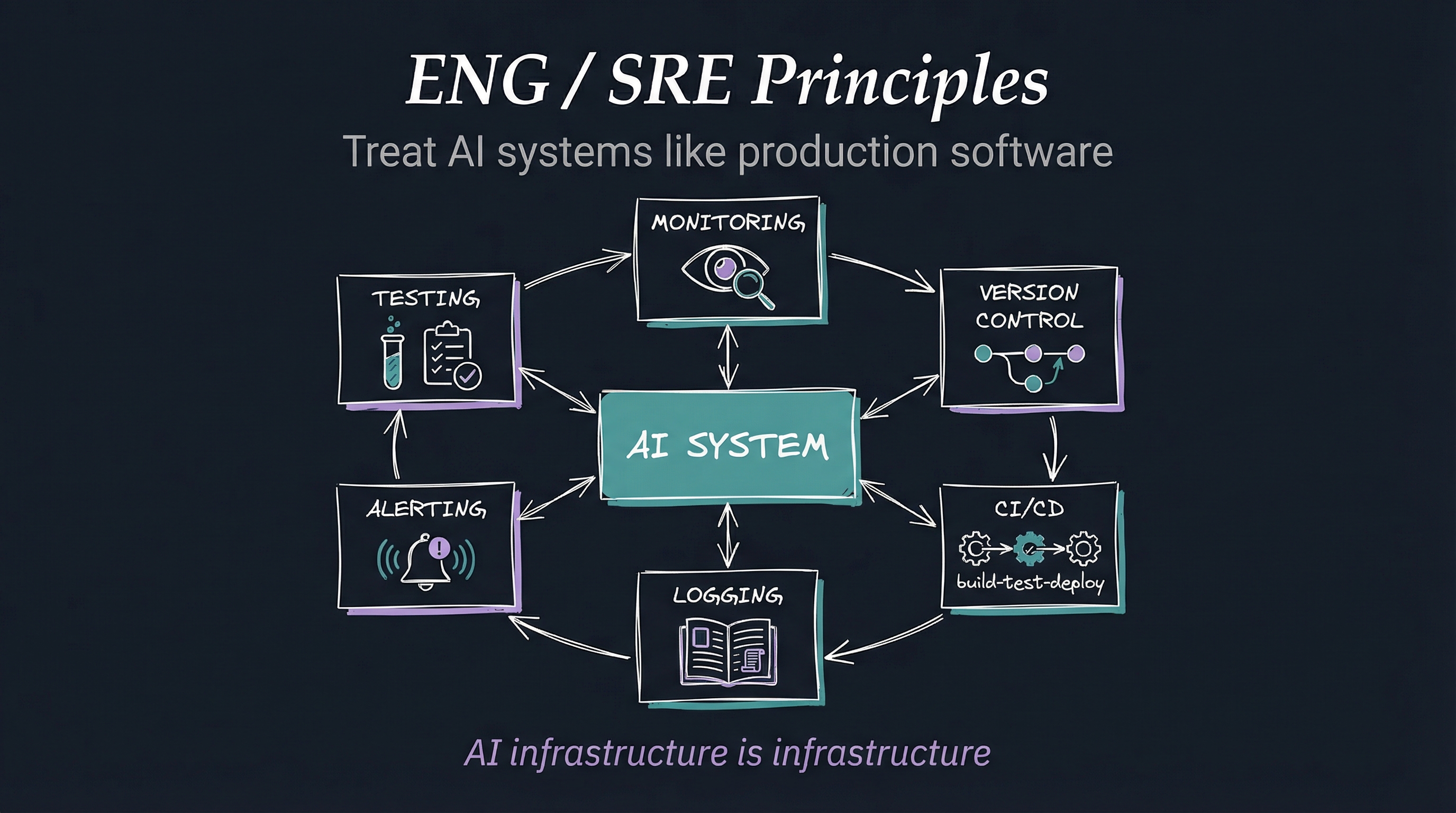

Build for reliability, observability, and operability from day one.

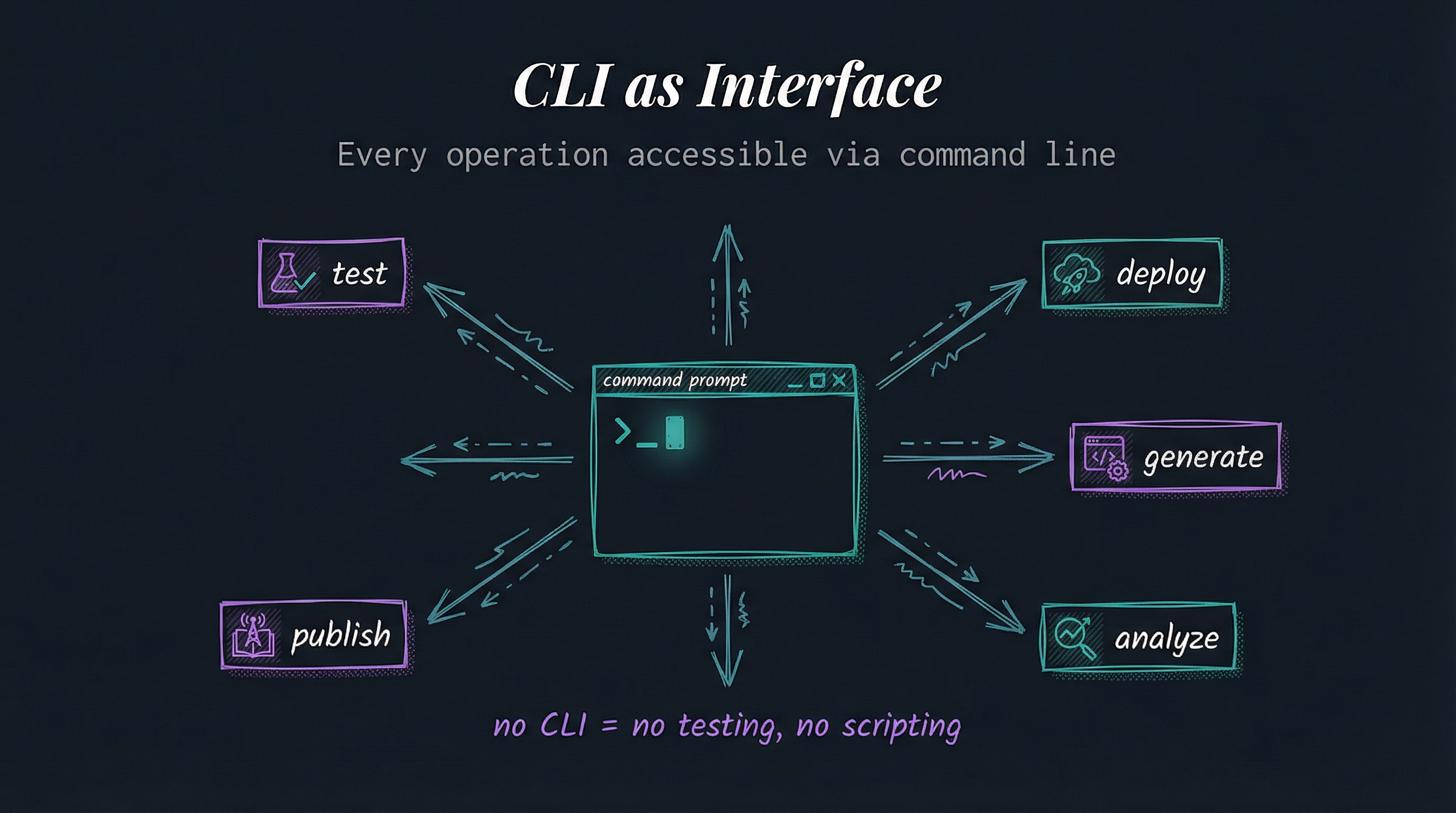

Command-line interfaces are universal, scriptable, and composable.

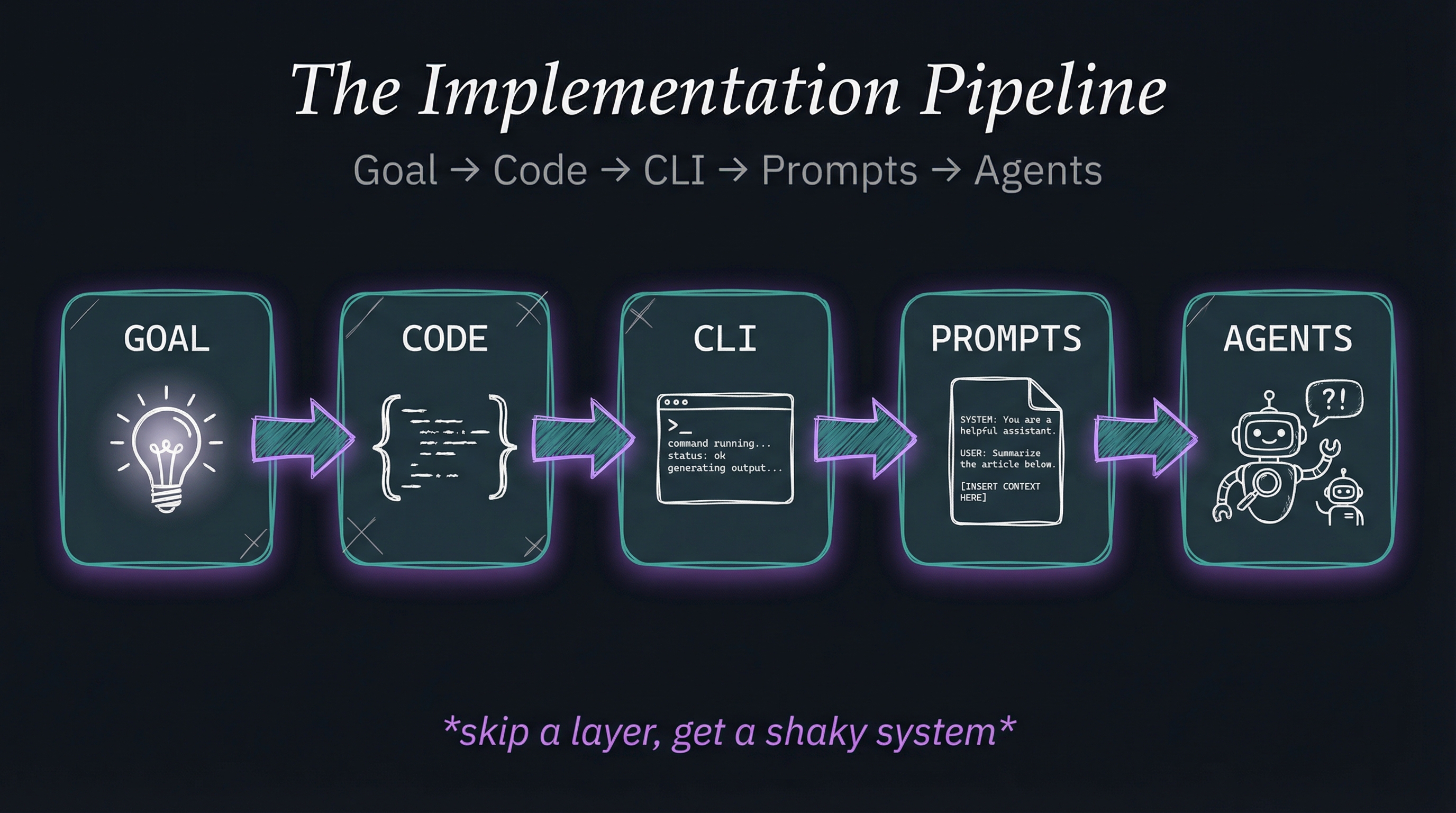

Build from concrete to abstract: goals become code, code exposes CLIs, CLIs enable prompts, prompts power agents.
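To make the first links of that chain concrete, here is a hedged Bash sketch of a hypothetical `notes` tool. The logic lives in plain functions (code), and a thin dispatcher exposes them as a command-line interface that a prompt or agent could then call. The tool name, subcommands, and `NOTES_FILE` location are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Minimal sketch of "goals become code, code exposes CLIs".
# The notes tool, its subcommands, and NOTES_FILE are hypothetical.

NOTES_FILE="${NOTES_FILE:-$HOME/.notes.txt}"

# Code layer: plain functions with no argument-parsing concerns.
notes_add() { printf '%s\n' "$*" >> "$NOTES_FILE"; }
notes_search() { grep -i -- "$1" "$NOTES_FILE" 2>/dev/null || true; }

# CLI layer: a thin dispatcher that maps subcommands onto the code layer.
notes_cli() {
  local cmd="${1:-}"
  if [ "$#" -gt 0 ]; then shift; fi
  case "$cmd" in
    add)
      notes_add "$@" ;;
    search)
      if [ -z "${1:-}" ]; then
        echo "usage: notes search <term>" >&2
        return 2
      fi
      notes_search "$1" ;;
    *)
      echo "usage: notes {add <text>|search <term>}" >&2
      return 2 ;;
  esac
}
```

Saved as a script that ends with `notes_cli "$@"`, this becomes a command such as `notes add "buy milk"`: a stable interface a prompt can invoke without knowing how the code works.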

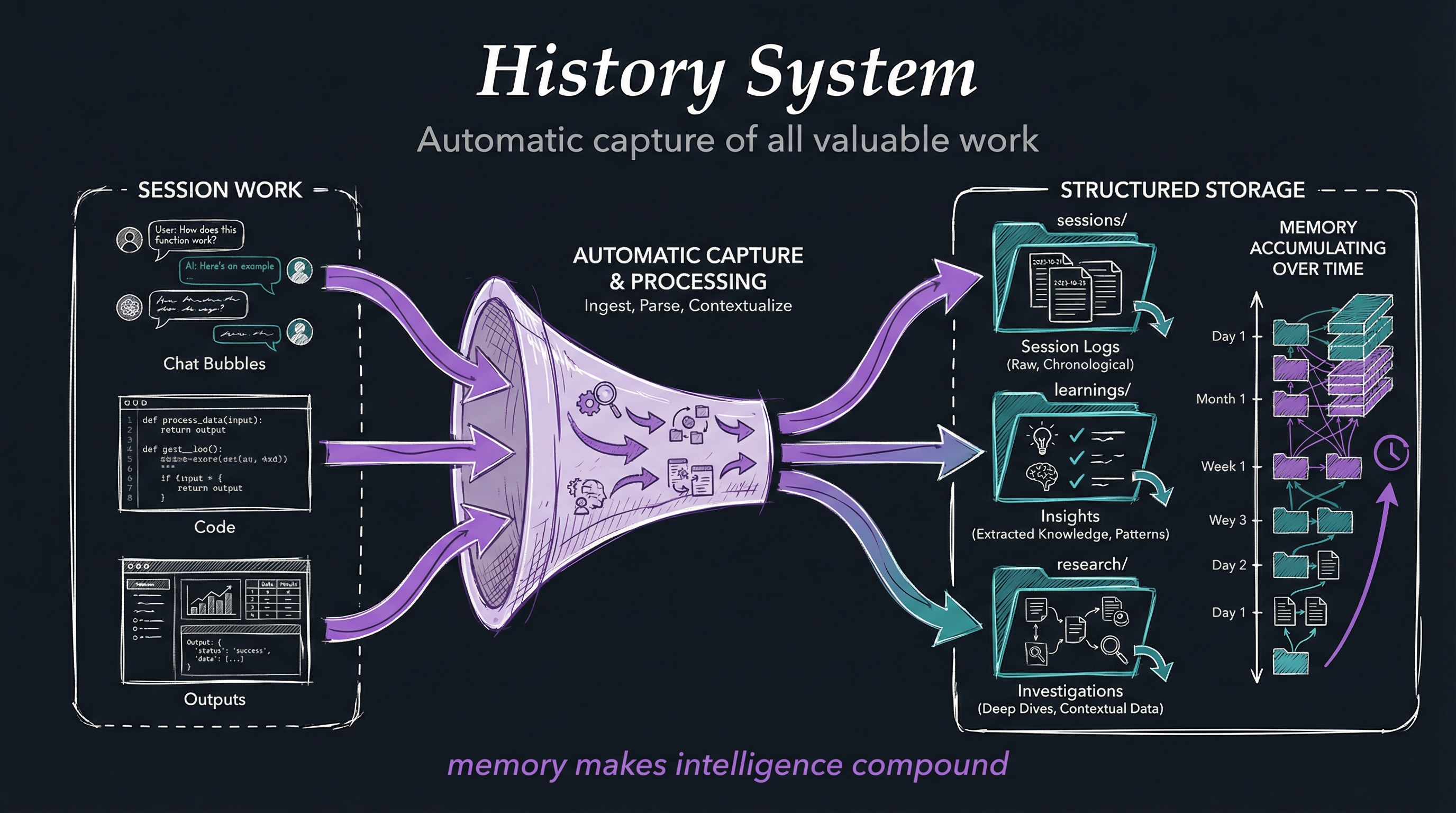

The system should improve itself. Capture learnings and evolve automatically.

Skills are the unit of capability. Manage them like code packages.

Context is everything. Automatically capture, organize, and surface relevant history.

Different tasks need different personas. Customize agent behavior and expertise.

PAI is built on a layered architecture where each component works together to create a powerful, extensible AI infrastructure.

An intelligent routing system directs each request to the appropriate skill based on patterns and keywords.
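Keyword-based routing can be sketched in a few lines of Bash with a `case` statement. The skill names (`research`, `fabric`, `writing`, `general`) and their keywords are hypothetical examples, not PAI's actual routing table. The first matching branch wins, so more specific patterns should come first.

```bash
#!/usr/bin/env bash
# Minimal sketch of keyword routing. The skills and keywords below are
# hypothetical examples, not PAI's real routes.

route_request() {
  local request
  # Lowercase with tr (works on older Bash too) for case-insensitive matching.
  request=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  case "$request" in
    *research*|*investigate*|*"find out"*) echo "research" ;;
    *summarize*|*summary*|*"extract wisdom"*) echo "fabric" ;;
    *blog*|*"write a post"*) echo "writing" ;;
    *) echo "general" ;;  # fallback when nothing matches
  esac
}

route_request "Please summarize this article"   # prints: fabric
```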

Parallel agent execution with context inheritance and specialized model selection per agent.
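Parallel execution with context inheritance maps naturally onto processes. In this minimal Bash sketch, each agent is a child process started in the background, it sees the shared context because the parent exported it, and each is launched with its own model name. `run_agent`, `AGENT_CONTEXT`, and the model names are hypothetical placeholders. A real agent would call your AI tooling where this sketch only echoes.

```bash
#!/usr/bin/env bash
# Minimal sketch of parallel agents. run_agent, AGENT_CONTEXT, and the model
# names are hypothetical; the child process stands in for a real agent call.

run_agent() {
  local name="$1" model="$2"
  # bash -c starts a separate process; it can read AGENT_CONTEXT only
  # because the variable was exported into the environment.
  bash -c 'echo "$1 ($2) context: $AGENT_CONTEXT"' _ "$name" "$model"
}

run_agents_in_parallel() {
  local outdir
  outdir=$(mktemp -d)
  export AGENT_CONTEXT="$1"   # shared context inherited by every agent
  # Each agent runs in the background with its own model and output file.
  run_agent researcher model-a > "$outdir/researcher.out" &
  run_agent engineer model-b > "$outdir/engineer.out" &
  wait                        # block until all agents finish
  cat "$outdir"/*.out         # collect results (alphabetical by file name)
  rm -rf "$outdir"
}

run_agents_in_parallel "Fix the failing build"
```

Writing each agent's output to its own file avoids interleaved lines when several agents finish at the same moment.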

Lifecycle hooks capture session start/end, skill execution, and system events for automation.
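One way to picture lifecycle hooks is a directory of executable scripts per event, run by a small dispatcher whenever that event fires. The minimal Bash sketch below uses a hypothetical `HOOKS_DIR` layout and event names such as `session-start`. It is not PAI's actual hook configuration format.

```bash
#!/usr/bin/env bash
# Minimal sketch of event-driven hooks. HOOKS_DIR, its per-event
# subdirectories, and the event names are hypothetical.

HOOKS_DIR="${HOOKS_DIR:-./hooks}"

# Run every executable hook registered for an event, in sorted glob order,
# passing the event payload as the first argument.
fire_event() {
  local event="$1" payload="$2" hook
  [ -d "$HOOKS_DIR/$event" ] || return 0   # no hooks registered
  for hook in "$HOOKS_DIR/$event"/*; do
    # Skip directories, non-executables, and an unmatched glob.
    [ -f "$hook" ] && [ -x "$hook" ] || continue
    # A failing hook is reported but does not stop the remaining hooks.
    "$hook" "$payload" || echo "hook failed: $hook" >&2
  done
}
```

Calling `fire_event session-start "$session_id"` and `fire_event session-end "$session_id"` (with `session_id` as a hypothetical identifier) would let automation scripts plug in without changing the caller.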

UOCS automatically documents all work with structured metadata for searchable context.
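The general idea of documenting work "with structured metadata for searchable context" can be sketched as Markdown records with YAML-style front matter, searchable with ordinary tools. `HISTORY_DIR`, the field names, and the file-naming scheme here are hypothetical and do not describe UOCS's actual format.

```bash
#!/usr/bin/env bash
# Minimal sketch of structured work capture. HISTORY_DIR, the front-matter
# fields, and the file naming scheme are hypothetical, not UOCS's format.

HISTORY_DIR="${HISTORY_DIR:-$HOME/history}"

# Write one record: metadata in front matter, body read from stdin.
# Prints the path of the new file.
record_work() {
  local type="$1" summary="$2" stamp file
  stamp=$(date -u +%Y-%m-%dT%H-%M-%SZ)
  mkdir -p "$HISTORY_DIR/$type"
  # $$ and $RANDOM make same-second name collisions unlikely (not impossible).
  file="$HISTORY_DIR/$type/$stamp-$$-$RANDOM.md"
  {
    echo "---"
    echo "type: $type"
    echo "date: $stamp"
    echo "summary: $summary"
    echo "---"
    cat
  } > "$file"
  echo "$file"
}

# List record files whose metadata or body contain a literal term.
search_history() {
  grep -rlF -- "$1" "$HISTORY_DIR" 2>/dev/null || true
}
```

Grouping records into one subdirectory per `type` keeps related history together, while plain `grep` remains enough to search across all of it.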

Install PAI in minutes by following the steps below.

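```bash
# Clone the repository
git clone https://github.com/danielmiessler/PAI.git ~/PAI

# Back up any existing ~/.claude directory
[ -d ~/.claude ] && mv ~/.claude ~/.claude.backup

# Link PAI's .claude directory into your home directory
ln -s ~/PAI/.claude ~/.claude

# Run the bootstrap script
~/.claude/Tools/setup/bootstrap.sh

# Create your environment file from the template, then edit it
cp ~/.claude/.env.example ~/.claude/.env
nano ~/.claude/.env

source ~/.zshrc  # Load PAI environment

# Launch Claude Code
claude
```

Note: The setup wizard configures your name, email, AI assistant name, and environment variables to customize PAI for your setup.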

PAI is built by and for the community. Connect with other builders, share your packages, and help shape the future of personal AI infrastructure.

The best AI in the world should be available to everyone. Join us in building the future of personal AI systems.